The key to the success of these methods lies in the rich representations these models build through an exhaustive and computationally expensive learning process. Deep learning is becoming essential in specialized areas of business. Nonetheless, it is not a panacea…

For many companies, data has become their most important asset. Ensuring the quality of the information, and managing and governing the data properly, is absolutely essential.

Stratio's Agile Team attended several events to present and explain the Argos Framework to the Agile community: AOS (Agile Open Space 2017), the BBVAgile Conference and CAS (Conference Agile Spain).

Argos is Stratio's new framework. It was created to develop independent products that are integrated into a single final product. The framework also places particular emphasis on dependencies and refinements.

This is the second chapter of a three-part series on Evolutionary Feature Selection with Big Datasets. We pick up where we left off, with a review of existing metaheuristics and a special focus on Genetic Algorithms.
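As a minimal sketch of how a Genetic Algorithm can drive feature selection, each individual below is a bit mask over the features (1 = keep the feature), evolved with tournament selection, one-point crossover, bit-flip mutation and elitism. The function name, its parameters and the toy fitness function are hypothetical illustrations, not the method used in the series; in a real setting the fitness would typically be something like a classifier's validation score on the selected features, possibly penalized by the number of features kept.

```python
import random

def genetic_feature_selection(fitness, n_features, pop_size=20, generations=50,
                              crossover_rate=0.9, mutation_rate=0.05, seed=0):
    """Hypothetical GA: evolve binary masks (1 = feature kept) that maximize `fitness`."""
    rng = random.Random(seed)
    # Initial population: random bit masks, one bit per feature.
    population = [[rng.randint(0, 1) for _ in range(n_features)]
                  for _ in range(pop_size)]

    def tournament(pop, k=3):
        # Tournament selection: the fittest of k randomly sampled individuals.
        return max(rng.sample(pop, k), key=fitness)

    best = max(population, key=fitness)
    for _ in range(generations):
        offspring = [best[:]]  # elitism: the best mask survives unchanged
        while len(offspring) < pop_size:
            p1, p2 = tournament(population), tournament(population)
            if rng.random() < crossover_rate:
                # One-point crossover between the two parents.
                cut = rng.randint(1, n_features - 1)
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation: each gene flips with probability mutation_rate.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            offspring.append(child)
        population = offspring
        best = max(population, key=fitness)
    return best

# Hypothetical toy fitness: features 0, 2 and 5 are "relevant"; every other
# selected feature costs 0.5, so the ideal mask keeps exactly {0, 2, 5}.
RELEVANT = {0, 2, 5}

def toy_fitness(mask):
    kept = {i for i, bit in enumerate(mask) if bit}
    return len(kept & RELEVANT) - 0.5 * len(kept - RELEVANT)

best_mask = genetic_feature_selection(toy_fitness, n_features=8)
print(best_mask, toy_fitness(best_mask))
```

Elitism guarantees that the best fitness found never decreases from one generation to the next; on big datasets the expensive part is evaluating `fitness`, which is why distributed evaluation matters.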

Spark Streaming is one of the most widely used real-time processing frameworks in the world, alongside Apache Flink, Apache Storm and Kafka Streams. However, compared with the others, Spark Streaming has more performance problems: it processes data in time windows (micro-batches) rather than event by event, which introduces delay.
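To make the latency argument concrete, here is a deliberately simplified, single-worker simulation comparing event-at-a-time processing with micro-batching over fixed time intervals. The functions, arrival times and costs are all hypothetical; this is not how Spark Streaming or Flink are implemented, it only illustrates why buffering events until a batch closes adds delay.

```python
import math

def event_at_a_time_latency(arrivals, cost_per_event):
    """Hypothetical model: each event is handled as soon as it arrives and the worker is free."""
    latencies, free_at = [], 0.0
    for t in sorted(arrivals):
        start = max(t, free_at)  # wait only if the previous event is still being processed
        free_at = start + cost_per_event
        latencies.append(free_at - t)
    return latencies

def micro_batch_latency(arrivals, batch_interval, cost_per_event):
    """Hypothetical model: events are buffered and processed together when their interval closes."""
    batches = {}
    for t in sorted(arrivals):
        # An event arriving at t belongs to the batch that closes at the next interval boundary.
        close = (math.floor(t / batch_interval) + 1) * batch_interval
        batches.setdefault(close, []).append(t)
    latencies, free_at = [], 0.0
    for close in sorted(batches):
        events = batches[close]
        start = max(close, free_at)
        free_at = start + cost_per_event * len(events)
        # Every event in the batch waits until the whole batch has been processed.
        latencies.extend(free_at - t for t in events)
    return latencies

arrivals = [0.1 * i for i in range(20)]  # one event every 100 ms
stream = event_at_a_time_latency(arrivals, cost_per_event=0.01)
batch = micro_batch_latency(arrivals, batch_interval=1.0, cost_per_event=0.01)
print(f"event-at-a-time mean latency: {sum(stream) / len(stream):.3f} s")
print(f"micro-batch mean latency:     {sum(batch) / len(batch):.3f} s")
```

With one event every 100 ms and a 1 s batch interval, each event in this model waits on average roughly half the interval before its batch even starts, whereas the event-at-a-time worker handles it almost immediately. The model ignores throughput, where batching can amortize per-task overhead.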

The human brain and our algorithms are hardly alike, and neuroscience and deep learning are quite different disciplines, but some concepts from one still lend support to ideas in the other. In this post, we will talk about one of those ideas: memory.

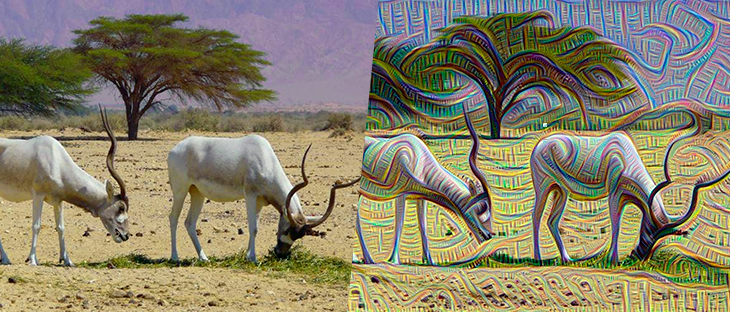

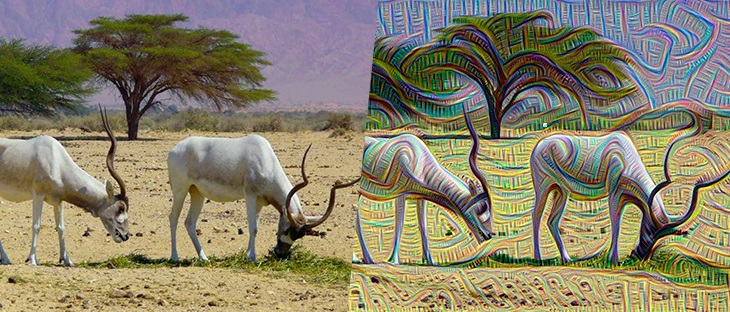

One of the most fascinating ideas in deep learning is that each layer learns a representation of the data geared toward the objective of the problem being solved. As a result, the network as a whole builds its own notion of each concept, derived from the data.

Ever felt daunted by a new role? Remember that feeling when you had just started at a new company? As a Scrum Master, that feeling is still relatively fresh… In today's companies, it is common to hear discussions about how to define the main responsibilities of a Scrum Master.

Deep learning applications are now truly impressive, ranging from image detection to natural language processing (for example, machine translation). They become even more remarkable when deep learning is unsupervised or is able to learn its own representations of the data.